Improving Multimodal Object Detection with Individual Sensor Monitoring

Dec 5, 2022

Christopher Kuhn

Markus Hofbauer

Goran Petrovic

Eckehard Steinbach

Image credit: IEEE

Abstract

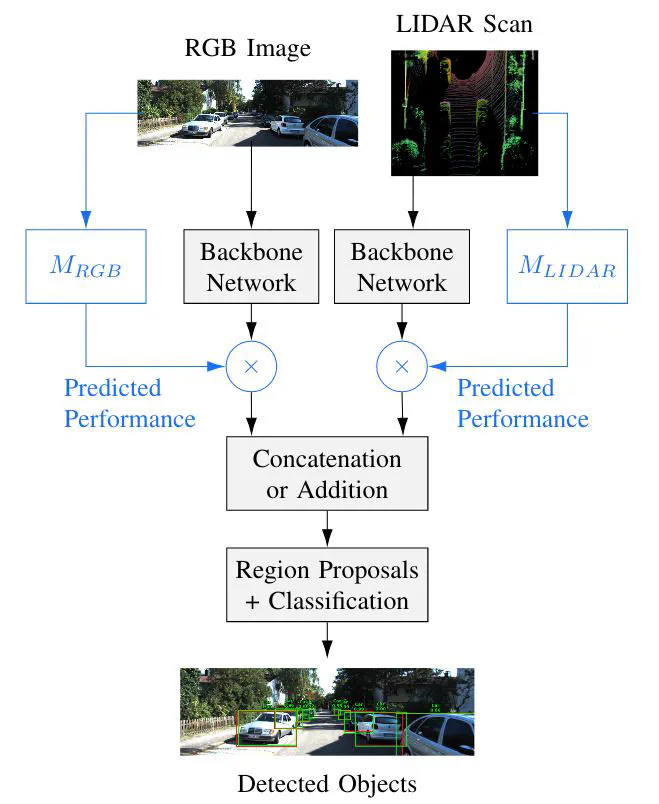

Multimodal object detection fuses different sensors such as camera or LIDAR to improve the detection performance. However, individual sensor inputs can also be detrimental to a system, for example when sun glare hits a camera. In this work, we propose to monitor each sensor individually to predict when an input would lead to incorrect detections. We first train one detection network for each sensor separately, using only that sensor as input. Then, we record the performance for each single-sensor network and train an introspective performance prediction network for each sensor. Finally, we train a multimodal fusion network where we weight the impact of each sensor with its predicted performance. This allows us to dynamically adapt the fusion to reduce the influence of harmful sensor readings based only on the current data. We apply the proposed concept to the state-of-the-art AVOD architecture and evaluate on the KITTI data set. The proposed sensor monitoring system improves the mean intersection-over-union performance by 4.6%. For inputs with a low predicted performance, the proposed approach outperforms the state of the art by over 10%, demonstrating the potential of using individual sensor monitoring to react to problematic input. The proposed approach can be applied to any fusion network with two or more sensors and could also be used for classification or segmentation tasks.
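The weighting step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `fuse_weighted`, the normalization of the scores so they sum to one, and the toy feature maps are all assumptions made for illustration. The idea shown is only that each sensor's features contribute to the fused result in proportion to that sensor's predicted performance.

```python
import numpy as np


def fuse_weighted(features, predicted_scores, eps=1e-8):
    """Fuse per-sensor feature maps, weighted by predicted performance.

    features: list of equally shaped arrays, one per sensor (hypothetical).
    predicted_scores: one non-negative score per sensor, e.g. the output
        of a per-sensor performance prediction network (assumed here).
    """
    stacked = np.stack(features, axis=0)  # shape: (num_sensors, ...)
    scores = np.asarray(predicted_scores, dtype=stacked.dtype)
    # Assumption: normalize scores so the weights sum to (almost exactly) one.
    weights = scores / (scores.sum() + eps)
    # Reshape so each weight broadcasts over its sensor's feature map.
    weights = weights.reshape((-1,) + (1,) * (stacked.ndim - 1))
    return (weights * stacked).sum(axis=0)


# Toy example: the camera is predicted to perform poorly (e.g. sun glare),
# so the LIDAR features dominate the fused result.
camera_feat = np.ones((2, 2))
lidar_feat = np.zeros((2, 2))
fused = fuse_weighted([camera_feat, lidar_feat], [0.2, 0.8])
```

With equal scores this sketch reduces to a plain average of the sensor features, so the predicted performance only changes the result when the sensors are expected to differ in reliability.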

Publication

In 24th IEEE International Symposium on Multimedia