Preprocessor Rate Control for Adaptive Multi-View Live Video Streaming Using a Single Encoder

Jan 12, 2022

Markus Hofbauer

Christopher Kuhn

Goran Petrovic

Eckehard Steinbach

Image credit: IEEE

Abstract

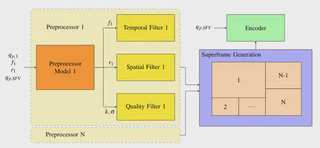

An increasing number of technical systems are equipped with multiple cameras. Because of cost and size constraints, they are often restricted to a single hardware encoder. Combining all views into a single superframe allows all camera views to be streamed at the same time, but it prevents individual rate/quality adaptation of each view. We propose a preprocessing filter concept that enables individual rate/quality adaptation while using a single encoder. Additionally, we create a preprocessor model that estimates the required preprocessing filter parameters from the specified encoding parameters. As a result, our approach can be used with any existing multi-view adaptation scheme designed to control multiple encoders. We design both an analytical and a machine learning-based bitrate model. Because both models perform equally well, either one can serve as the core of our preprocessor model. Both models are specifically designed to estimate the influence of the quantization parameter, frame rate, frame size, group-of-pictures length, and a Gaussian low-pass filter on the video bitrate. Furthermore, the rate models outperform state-of-the-art bitrate models by at least 22% in terms of overall root mean square error. Our bitrate models are the first of their kind to consider the influence of a Gaussian low-pass filter. We evaluate the preprocessing approach by streaming six camera views in a teledriving scenario with a single encoder and compare it to using six individual encoders. The experimental results demonstrate that the preprocessing approach achieves bitrates similar to those of the individual encoders for all views. While achieving comparable rate and quality for the most important views, our approach requires a total bitrate 50% lower than a single-encoder approach without preprocessing.
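To make the preprocessing idea more concrete, here is a minimal sketch. It assumes grayscale views of equal size stored as nested Python lists. Each view is low-pass filtered with its own Gaussian σ and then tiled into one superframe that a single encoder would receive, so stronger filtering on a less important view removes high-frequency detail before encoding. The function names, the grid layout, the example σ values, and the `detail_energy` proxy (mean absolute difference between horizontal neighbors, a rough stand-in for the detail an encoder must spend bits on) are all illustrative assumptions. This is not the paper's preprocessor model or either of its bitrate models.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale view, row-major (illustrative assumption)


def _clamp(v: int, n: int) -> int:
    """Clamp an index to [0, n - 1] (clamp-to-edge border handling)."""
    return min(max(v, 0), n - 1)


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1-D Gaussian kernel; sigma <= 0 means 'no filtering'."""
    if sigma <= 0:
        return [1.0]
    radius = max(1, math.ceil(3 * sigma))  # cover roughly +/- 3 sigma
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_blur(img: Image, sigma: float) -> Image:
    """Separable Gaussian low-pass filter: horizontal pass, then vertical pass."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    tmp = [[sum(k[i + r] * row[_clamp(x + i, w)] for i in range(-r, r + 1))
            for x in range(w)] for row in img]
    return [[sum(k[i + r] * tmp[_clamp(y + i, h)][x] for i in range(-r, r + 1))
             for x in range(w)] for y in range(h)]


def build_superframe(views: List[Image], sigmas: List[float], cols: int) -> Image:
    """Hypothetical helper: filter each view with its own sigma, then tile row by row."""
    if len(views) != len(sigmas):
        raise ValueError("need one sigma per view")
    h, w = len(views[0]), len(views[0][0])
    rows = math.ceil(len(views) / cols)
    frame = [[0.0] * (w * cols) for _ in range(h * rows)]
    for idx, (view, sigma) in enumerate(zip(views, sigmas)):
        filtered = gaussian_blur(view, sigma)
        oy, ox = (idx // cols) * h, (idx % cols) * w
        for y in range(h):
            frame[oy + y][ox:ox + w] = filtered[y]
    return frame


def tile(frame: Image, idx: int, h: int, w: int, cols: int) -> Image:
    """Cut view number idx back out of the superframe."""
    oy, ox = (idx // cols) * h, (idx % cols) * w
    return [row[ox:ox + w] for row in frame[oy:oy + h]]


def detail_energy(img: Image) -> float:
    """Crude high-frequency proxy (NOT a bitrate model): mean |horizontal difference|."""
    diffs = [abs(row[x + 1] - row[x]) for row in img for x in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


def checkerboard(h: int, w: int) -> Image:
    """Synthetic worst-case texture used only for this demo."""
    return [[255.0 if (x + y) % 2 else 0.0 for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    views = [checkerboard(4, 6) for _ in range(6)]  # six views, as in the teledriving setup
    sigmas = [0.0, 0.0, 1.0, 1.0, 2.0, 2.0]  # hypothetical: views 0 and 1 most important
    frame = build_superframe(views, sigmas, cols=3)
    for i, s in enumerate(sigmas):
        print(i, s, round(detail_energy(tile(frame, i, 4, 6, 3)), 1))
```

Separable filtering is used because a 2-D Gaussian factors into two 1-D passes. In the paper's setting, the per-view filter strength would come from the preprocessor model rather than being fixed by hand as in this demo.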

Type

Publication

In IEEE Transactions on Circuits and Systems for Video Technology